If you are using Hardal to measure your first-party data (1PD), you can import it to BigQuery. This way you can use BigQuery to analyze your data.

⚠️ Disclaimer: Make sure your law regulations allow you to import your first-party data to BigQuery. See GDPR and Google Cloud Privacy Policy here.

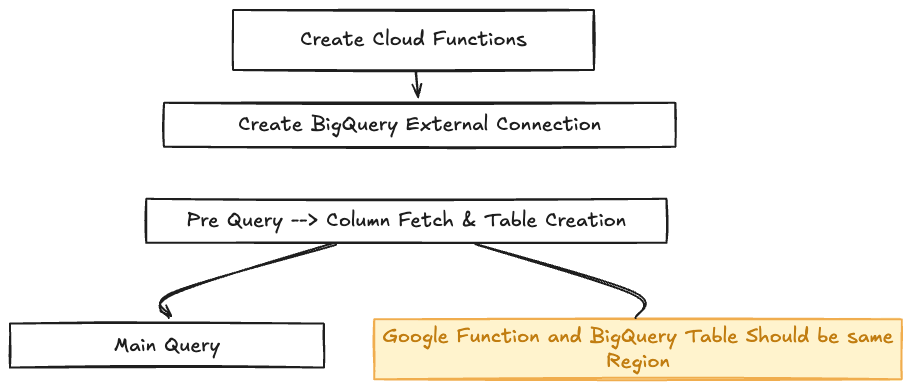

We will use Cloud Function and BigQuery External Connection to import your data to BigQuery.

Step-by-step guide:

First, you need to create a data set on Hardal Data Hub.

- Go to your Hardal project dashboard, here and click on your signal.

- Click on "Analytics" > "Data Hub" and create a new data set. You can learn more about Data Hub here.

Once you create a data set, you will receive a report table name like this:

report_<your-signal-id>_<your-report-table-name>_<your-report-table-id>

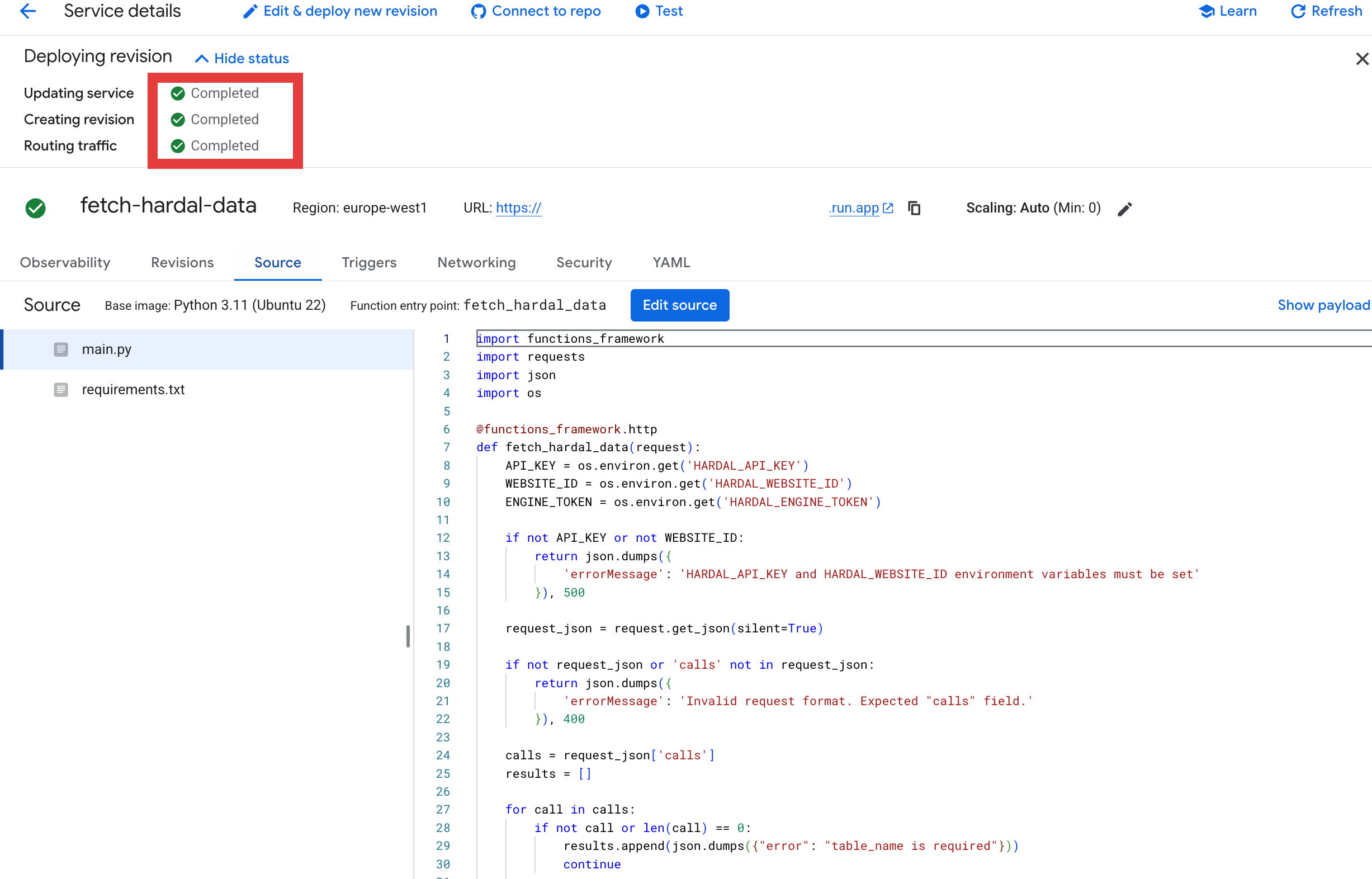

1. Go to you BigQuery project and create a new Cloud Function.

main.py

import functions_framework

import requests

import json

import os

@functions_framework.http

def fetch_hardal_data(request):

API_KEY = os.environ.get('HARDAL_API_KEY')

WEBSITE_ID = os.environ.get('HARDAL_WEBSITE_ID')

ENGINE_TOKEN = os.environ.get('HARDAL_ENGINE_TOKEN')

if not API_KEY or not WEBSITE_ID:

return json.dumps({

'errorMessage': 'HARDAL_API_KEY and HARDAL_WEBSITE_ID environment variables must be set'

}), 500

request_json = request.get_json(silent=True)

if not request_json or 'calls' not in request_json:

return json.dumps({

'errorMessage': 'Invalid request format. Expected "calls" field.'

}), 400

calls = request_json['calls']

results = []

for call in calls:

if not call or len(call) == 0:

results.append(json.dumps({"error": "table_name is required"}))

continue

table_name = call[0]

all_data = []

offset = 0

limit = 1000

page_count = 0

while True:

url = f'https://engine.usehardal.com/analytics/report-tables/{table_name}/data'

params = {

'signal_id': WEBSITE_ID,

'signal_token': API_KEY,

'limit': limit,

'offset': offset

}

headers = {

'Authorization': f'Bearer {ENGINE_TOKEN}',

'Content-Type': 'application/json'

}

try:

response = requests.get(url, headers=headers, params=params, timeout=60)

response.raise_for_status()

data = response.json()

page_count += 1

current_page_size = len(data.get('data', []))

print(f"Page {page_count}: offset={offset}, received={current_page_size} rows")

if 'data' in data and current_page_size > 0:

all_data.extend(data['data'])

if current_page_size >= limit:

offset += limit

else:

print(f"Last page reached. Total rows: {len(all_data)}")

break

else:

print(f"No data on page {page_count}")

break

except requests.exceptions.Timeout:

results.append(json.dumps({"error": "Request timeout"}))

break

except requests.exceptions.RequestException as e:

results.append(json.dumps({"error": f"API error: {str(e)}"}))

break

except Exception as e:

results.append(json.dumps({"error": f"Unexpected error: {str(e)}"}))

break

results.append(json.dumps({

"data": all_data,

"total": len(all_data),

"pages_fetched": page_count,

"table_name": table_name

}))

return {'replies': results}, 200, {'Content-Type': 'application/json'}

Make sure to save the function and deploy it. 👇🏻

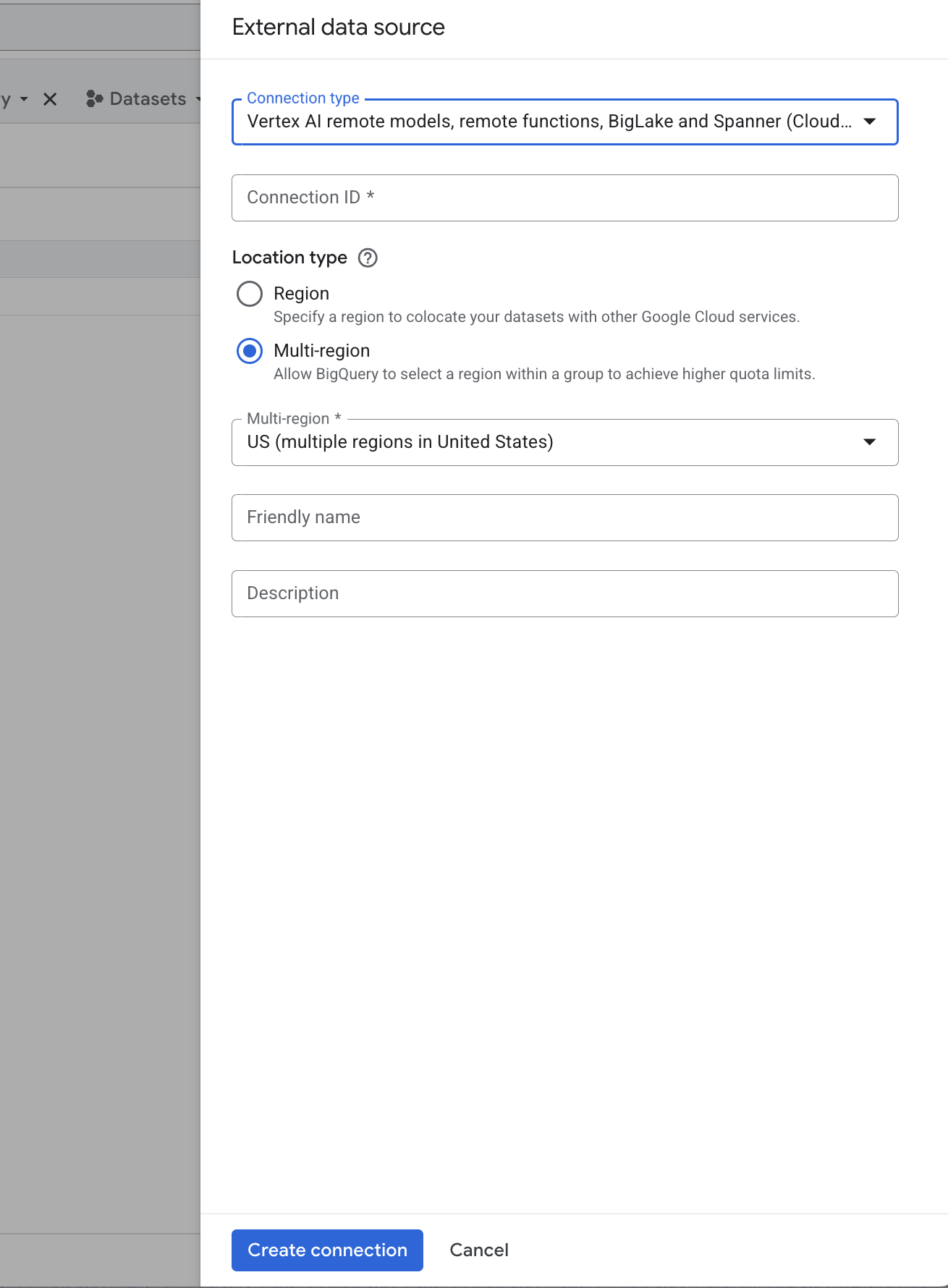

2. Create a new BigQuery External Connection

Go to Connections and create a new External Connection.

- Connection Type: Vertex AI remote models, remote functions.

- Connection ID:

<your-any-connection-id> - Region: (Same region as Cloud Function and BigQuery Tables)

3. Sample Row Query

Use this query to see the sample row of the data.

SELECT

JSON_QUERY(`<your-project-id>.<your-dataset-id>.<your-table-id>.fetch_hardal_data`(

'<your-report-table-name>'

), '$.data[0]') as sample_row;

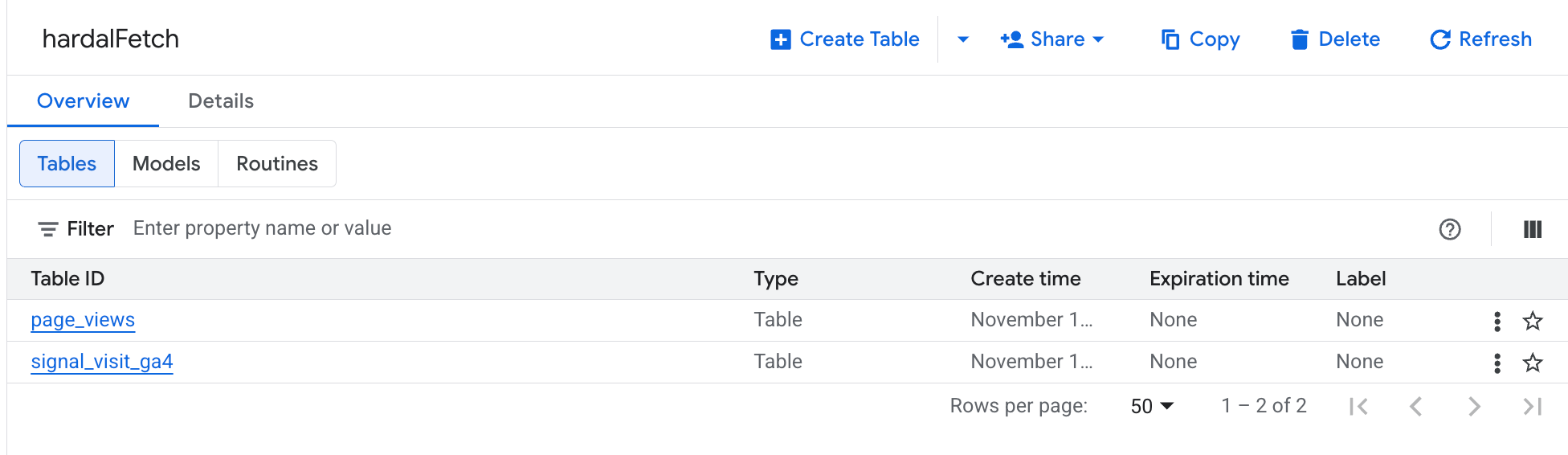

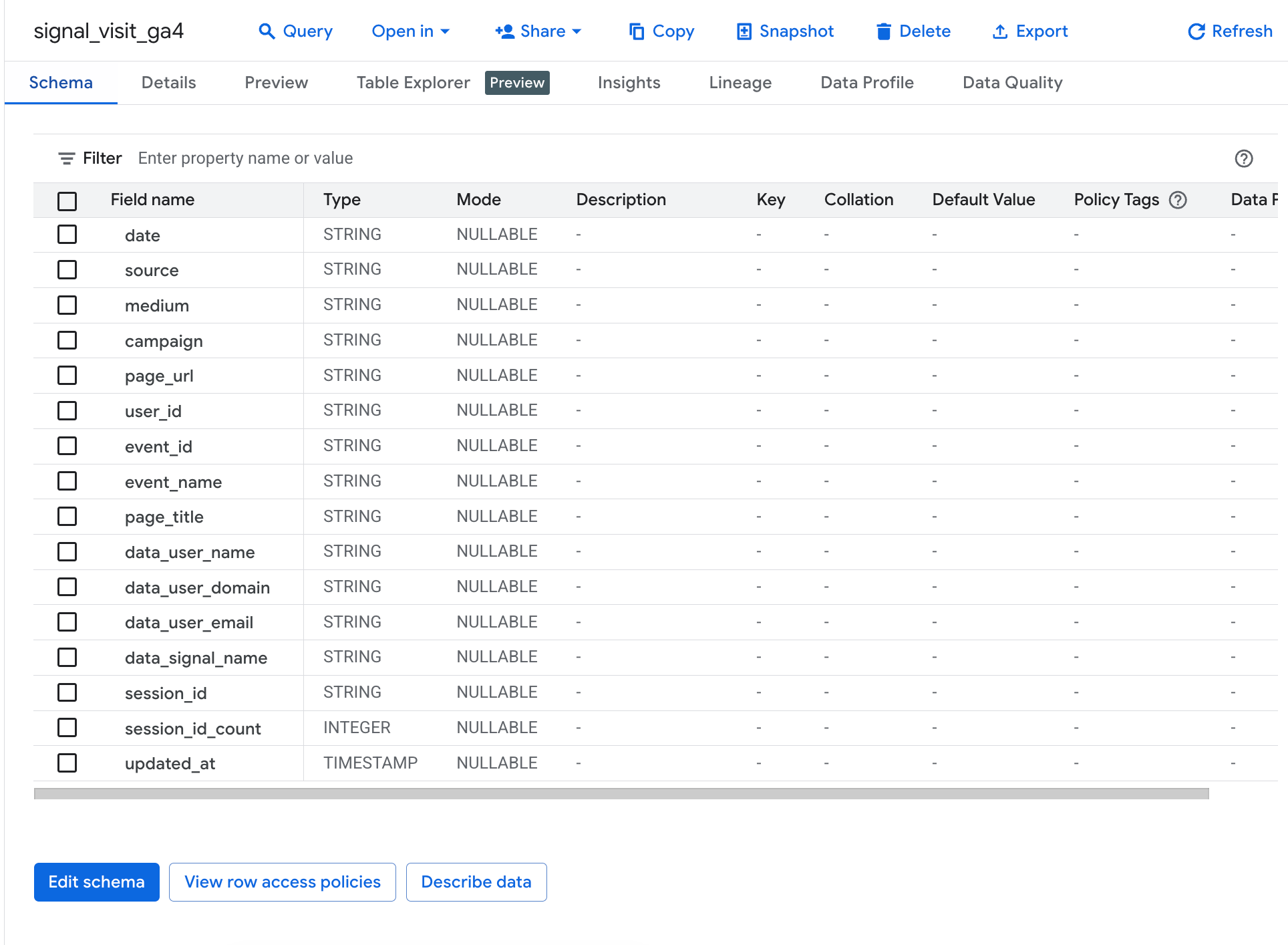

4. Create a new table in BigQuery.

Remember: ⚠️ Function and BigQuery Table must be in the same region.

Use above query to see your data structure and create a new table in BigQuery.

CREATE OR REPLACE TABLE `<your-project-id>.<your-dataset-id>.<your-table-id>` AS

SELECT

JSON_VALUE(item, '$.date') as date,

JSON_VALUE(item, '$.source') as source,

JSON_VALUE(item, '$.medium') as medium,

JSON_VALUE(item, '$.campaign') as campaign,

JSON_VALUE(item, '$.page_url') as page_url,

JSON_VALUE(item, '$.user_id') as user_id,

JSON_VALUE(item, '$.event_id') as event_id,

JSON_VALUE(item, '$.event_name') as event_name,

JSON_VALUE(item, '$.page_title') as page_title,

JSON_VALUE(item, '$."Data.user_name"') as data_user_name,

JSON_VALUE(item, '$."data.user_domain"') as data_user_domain,

JSON_VALUE(item, '$."data.user_email"') as data_user_email,

JSON_VALUE(item, '$."data.signal_name"') as data_signal_name,

JSON_VALUE(item, '$.session_id') as session_id,

CAST(JSON_VALUE(item, '$.session_id_count') AS INT64) as session_id_count,

CURRENT_TIMESTAMP() as updated_at

FROM (

SELECT

JSON_QUERY(`<your-project-id>.<your-dataset-id>.<your-table-id>.fetch_hardal_data`(

'<your-report-table-name>'

), '$.data') as data_array

),

UNNEST(JSON_QUERY_ARRAY(data_array)) as item;

🎊 Congratulations! You have successfully imported your Hardal first-party data to BigQuery.